Category: Quality Assurance

Setting Expectations – Lessons From a Little League Umpire

I’ve found through the years that projects go more smoothly when expectations are set at the beginning. Whether I’m in a Business Analyst or Quality Assurance role, I’ve found that the project goes more smoothly when the processes are laid out clearly up-front and the known limitations of the project are called out and addressed before the project starts. When people are not aware of the time that they need to dedicate to the project or the limits of the project scope until the project is well underway, they can get quite upset.

My most memorable lesson on the importance of setting expectations is still the one I learned while umpiring little league in high school.

Strike One!

I umpired my first game when I was 14 years old. The league was a city-run little league program, and some of the divisions had kids as old as me. Other than watching a lot of baseball, the only preparation the city gave me was handing me an armful of equipment and a copy of the rulebook. I read the rulebook several times and felt that I was as ready as I could be.

I set up the bases and put on my equipment for the first game. I said hi to the two managers and nervously took my spot behind the plate. My calls were a little shaky, but I was surviving. Surviving until the third batter, that is.

I lost my concentration on a pitch and uncertainly called, “Strike?” The batter’s manager yelled at me, “How can that be a strike?!? It hit the dirt before it even crossed the plate!”

I sheepishly said, “It did? I guess it was a ball then.”

Needless to say, the managers, players, and parents argued with me vehemently on nearly every call after that. I wasn’t sure I’d ever want umpire another game. However, the manager of the umpires was desperate for warm bodies to call the games and convinced me to finish out the season. I never had a game as bad as the first one, but every game was stressful and the managers, parents, and players argued with me regularly.

Year Two – Setting Expectations

In the next year, I read a book called “Strike Two” by former Major League umpire Ron Luciano. His stories about handling some of the toughest personalities in baseball gave me the insight and confidence that I needed to try umpiring again. The difference this year was that I was going to set some expectations before every game.

I met with both managers before every game and explained the following:

- I’m the only umpire, and I only see each play once. Don’t bother arguing any judgment call such as ball vs strike or safe vs out. I won’t change my call.

- I call a big strike zone. If any part of the ball is over any part of the plate from the top of the batter’s shoulders to the bottom of their knees, I’m calling it a strike.

- I’m not a professional umpire. I may make an error in the rules. If either manager believes that I made an error in the rules, let me know. I will call a time-out and meet with both managers to review the rule book and make an adjustment to the call if warranted.

Taking the time to set these expectations made a huge difference in my ability to manage the game. The managers would tell their players that I have a big strike zone and that arguing with me was pointless. The players swung a lot more, put the ball in play a lot more, and I got very few arguments.

In addition, after the games, parents would tell me that it was one of the best games they went to all year, especially for the younger age groups. Apparently, in many other games, the batters for each team would simply draw walks until they reached the 10-run mercy rule for the inning. This was boring for everyone involved. Because players were swinging more when I umpired, the ball was put into play more, and the kids and parents had a lot more fun.

Your Experiences

I’d like to hear about your experiences with setting expectations. Have you found that setting expectations up front helps your projects? Have you received any resistance to setting expectations at the start of a project?

Tester Traits – Comfort with Cognitive Dissonance

I believe that software testers need to be comfortable with two conflicting ideas in order to best contribute to the success of a project. They need to be able to find and point out every defect in a given software application; however, they also need to keep in mind that the software can meet the business’ needs even if it is not perfect. The best software testers that I’ve worked with are comfortable with the cognitive dissonance caused by these two conflicting ideas.

Goal of Perfection

I’ve worked with some software testers who are great at finding defects, but they get so focused on the defects that they lose sight of the big picture. They seem to believe that the ultimate purpose of software is to be perfect rather than to meet a business goal. These software testers can cause a software project to be unnecessarily delayed by arguing that minor issues should prevent software from going live. They can also reduce end-user acceptance of a product by complaining to anyone who will listen that the software is subpar and by pointing out every known issue to end-users.

I believe that testers do need to point out every bug possible to the internal development team and ensure that the team knows the business implications of any bugs. However, good testers need to be comfortable with the fact that perfection is not ultimate goal for business software. Instead, software has some business purpose that does not require perfection. Once software goes live, I believe that a tester should champion the software to the end-users.

When I encounter a tester who has trouble accepting imperfect software, I remind them that most useful commercial software has known bugs. Often, some of these bugs are even listed in a “Read Me” file for end users to see. There is a real value to having less than perfect software available rather than having no software at all.

Goal of Delivery

Although I have seen testers who are too focused on perfection, I have also seen testers who are too focused on delivery of the software. These testers either are not detail-oriented enough to find critical bugs or they ignore the real business impact of bugs. These testers do not want to stand in the way of the delivery date under any circumstances. Project Managers often put enormous pressure on testers to sign-off on software that was not properly tested or has major bugs in order to meet a delivery deadline. However, the best testers will have the backbone to stand up to any pressures and point out the specific risks of going live.

In my opinion, it’s important that the tester understand the business goals well enough to point out the specific risks to key decision makers; however, I believe that it should not be up to the tester to decide whether or not software goes live. The ultimate decision should be made by management who understands the full scope of business needs and can weigh the quality risks against every other concern.

The Ponds

There’s a poem by Mary Oliver called The Ponds that I think captures the essence of this trait that software testers need to contribute to a successful project. Testers need to notice imperfections while still being “willing to be dazzled”. This version of the poem is from a book called New and Selected Poems by Mary Oliver published by Beacon Press in 1992. I hope you enjoy it and find it relevant.

Every year

the lilies

are so perfect

I can hardly believe

their lapped light crowding

the black,

mid-summer ponds.

Nobody could count all of them –

the muskrats swimming

among the pads and the grasses

can reach out

their muscular arms and touch

only so many, they are that

rife and wild.

But what in this world

is perfect?

I bend closer and see

how this one is clearly lopsided –

and that on wears an orange blight –

and this one is a glossy cheek

half nibbled away –

and that one is a slumped purse

full of its own

unstoppable decay.

Still, what I want in my life

is to be willing

to be dazzled –

to cast aside the weight of facts

and maybe even

to float a little

above this difficult world

I want to believe I am looking

into the white fire of a great mystery.

I want to believe that the imperfections are nothing –

that the light is everything – that it is more than the sum

of each flawed blossom rising and fading. And I do.

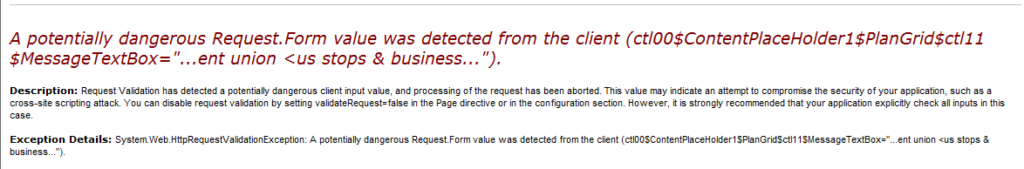

User Acceptance Testing Tales from the Trek – Writing a Perfect Bug

My early experiences running User Acceptance Testing (UAT) typically involved dealing with just 1 or 2 users. When they encountered an error, they’d show it to me, and I’d record it. However, as I gained experience in Quality Assurance, I worked on larger projects.

Training – How to write a bug

On my first larger project, I needed to train a group of 20 users who were going to perform User Acceptance Testing. Having worked with several different users in the past, I knew several of the pitfalls that users may encounter. However, I never really had asked users to record errors themselves. Still, I thought it would be easy to get the point across.

During the training session, I said that it was critical to record all necessary steps so that the developer could recreate the bug. I told the users that we often hear from users who simply say, “It doesn’t work,” and explained that the developers will not be able to fix a problem that was described so generically. The users said that they understood perfectly and were ready to start testing.

Bug Writing – Frustration

With 20 inexperienced testers working independently, it was important to filter the reported bugs before assigning them to the developers. I was responsible for triaging all bugs reported by the users. I verified that I could recreate bugs and eliminated duplicate bugs before assigning them to the developers. If I could not recreate a bug based on the instructions, I would reassign it to the user who reported it and ask for more detail. I assumed that the users would quickly realize when they were not providing sufficient detail and start writing perfect bugs after a day or two.

I assumed wrong.

Although a few users were writing clear, reproducible bugs, most were writing ones that were way too generic. For example:

- I was on the “Choose a Course” screen.

- When I chose a course, I got an error.

In this case, when I tried to reproduce the bug, I did not receive an error. So, I sent the bug back to the user requesting more detail. In most cases, the first time a bug was recorded, I had to reject it. In many cases, I had to reject the rewrite as well. Pretty quickly, the users got fed up with me and complained to their manager that I was rejecting their bugs.

Enlightenment

I realized that I needed to find a way to illustrate to the users exactly what was needed in a clear, reproducible bug report. I developed the following simple exercise that was very effective. I have used it on all subsequent large-scale UAT efforts with the same success.

I ask the users to do the following with their first 5 bug reports:

- Record the bug as clearly as you can and assign it to yourself.

- Wait 5 hours.

- Try to recreate the bug following only the information that you recorded.

- If you are able to recreate the bug, assign it to me. Otherwise, go back to step 1.

It is important that the users wait some amount of time before trying to recreate the bug. I found that when they try to recreate the bugs immediately, the steps are too fresh in their mind to truly follow only what they wrote. They seem to fill in the gaps without realizing it.

I have found that once users complete this exercise, they are able to consistently write bugs that have enough detail to be reproducible. They do not become expert testers, of course, but they are able to write a useful bug.

I’m interested in hearing about other people’s experiences as well.

What concepts have you found especially difficult to get across to people performing UAT for the first time?

Were you able to find a technique that helped the users better understand the concept?

User Acceptance Testing – Planning for Value

Throughout the years, I’ve led many user acceptance testing (UAT) efforts. There are a couple of common ways to plan the testing that I’ve found to be problematic. The methods allow someone to say they’ve planned UAT but add very little value. The end result is that unhappy users report problems after the software is live, and the project manager asks why they didn’t find the problems during UAT.

After years of experimentation, I found a method that adds a lot of value to the testing process with less effort than the other methods.

Method 1 – Users write UAT scripts

I’ve been involved with quite a few projects where the client wanted the users of the software to write the test scripts for UAT. This was often done to reduce development costs. The folks planning the project believed that they would save money by having business employees write the test scripts for UAT rather than have someone from the development team work on them. They also believed, often correctly, that the business folks understood the goals of the system best, so they were the most qualified to write the UAT scripts.

The problem with this is that most business users have more than a full time job already. In addition, most business users have never planned a testing effort or have written a test script. It’s difficult enough for business users to find time to execute tests, but nearly impossible for them to find time to plan testing. Even if they do find the time to plan it, their lack of experience results in test scripts that test very little.

Method 2 – Test Analyst writes UAT scripts

On other projects, I’ve been asked to write test scripts that the users can run during UAT. Usually, I’m asked for the same scripts that the test team ran during system testing. Under this scenario, it’s unlikely that the users will find any issues that the test team didn’t already find. This method doesn’t uncover functional issues that the test team missed. In addition, I’ve found that the business users rarely take the time to follow the test script closely and end up not doing much testing at all.

UAT Success Method – Guided Functional Checklists

The UAT planning method that I’ve found adds the most value with the least effort is Guided Functional Checklists. This method is quite simple:

- A member of the test team meets with each key user and asks what business goals they need to accomplish with the software.

- The test team member compiles the list into a spreadsheet that will be used for recording the test results.

That’s all there is to the planning. The result is an organized list of what should be tested based on the business goals of the users rather than based on the development team’s view of the project. Because this takes little of the users’ time, they are able to make time to complete the planning. By not having a detailed script, users are able to ensure they can meet their business goals in the way that they will in the field, which is often a bit different than the development team expects. This allows everyone to identify programming or training issues before going live.

Feedback

What UAT planning challenges have you faced? How did you address the challenges? I’d love to hear your tales from the trek.

Metric Misuse – Quality Assurance Metrics Gone Awry

I was reading through some posts from Bob Sutton, one of my favorite management gurus, and I ran across a post that contains one of my favorite Dilbert comic strips.

Bob Sutton’s post, as well as the comments that I made on his blog, reminded me of one of my favorite topics: misused Quality Assurance metrics.

Tying Quality Assurance Metrics to Financial Rewards – A Dangerous Game

“Treat monetary rewards like explosives, because they will have a powerful impact whether you intend it or not.” –Mary and Tom Poppendieck, authors of Implementing Lean Software Development: From Concept to Cash

Over the years, many people have asked me what Quality Assurance metrics they should use to evaluate employee performance. My advice is that Quality Assurance metrics should not be used directly to evaluate employee performance. The Dilbert comic strip may seem a bit extreme, but it’s exactly what happens when employee performance is based strictly on metrics. This is true regardless of whether monetary rewards are explicitly tied to the metrics or not.

In my comments on Bob Sutton’s blog, I mentioned three specific metrics that had unintended effects when used for evaluating employee performance:

- rewarding testers for the number of test cases they wrote resulted in poorly written test cases;

- rewarding testers for the number of bugs they found resulted in a high number of unimportant or duplicate bugs reported; and

- penalizing testers for bugs rejected by the test lead or development staff resulted in important bugs going unreported.

Many people think that they have the ability to write a set of metrics that can be used to unequivocally gauge the performance of a Quality Assurance professional, but I have not yet encountered a metric that couldn’t be manipulated to favor the employees.

(If the metric can’t be gamed, it probably isn’t under the control of the employees, so it wouldn’t be effective at driving behavior anyhow.)

Are Metrics Worthless Then?

Actually, metrics are a great tool for identifying coaching opportunities and potential problems. However, in order to get honest metrics, they shouldn’t be used directly for employee evaluations or employee rewards.

When I’ve looked at the metrics that I mentioned earlier with an eye towards coaching, I had excellent results.

- Reviewing the number of test cases written helped me identify a tester on my team who was putting much more detail than I wanted into his test cases. After some coaching, he was able to consistently meet my expectations.

- Reviewing the number of bugs found by each tester helped me identify a tester who was digging into the root cause of the most difficult to reproduce bugs. She didn’t report as many bugs as others, but her work was critical to getting a great product out the door in a timely manner. It turned out that she was the most skilled tester even though she reported the fewest bugs.

- Reviewing the number of bugs rejected by the development staff helped me identify a manager who was evaluating his programmers based solely on the number of valid bugs found in their code. The developers were motivated to simply mark bugs as invalid rather than fix the bugs. This insight allowed me to address the problem directly with that manager.

Good Quality Assurance metrics provide powerful tools for managing a Quality Assurance team when used properly. However, they shouldn’t be used in a vacuum. They should just be considered one data point among many.

I was only able to scratch the surface of this topic in this blog post. I plan to discuss specific metrics in future blog posts. In the meantime, if you want to read a much more in-depth review of the pros and cons of employee incentives, you can find one paper here.

Your Experiences

I know that a lot of people feel passionately about Quality Assurance metrics, both pro and con. I’m very interested to hear about your experiences with Quality Assurance metrics. Have you found any that were particularly useful? Have you found any that had unintended consequences?